Creating RAID0

Creating and failing RAID1 (w/o spare disk)

Creating and failing RAID1 (w/ spare disk)

Creating and failing RAID01

Creating and failing RAID10

As you can see, I'll describe the main RAID levels, nothing special. All topics are described in much the same way, so just pick the one that interests you. I don't use real disks, just ordinary empty files which I set up as block devices with losetup. I won't explain the RAID levels here; I assume you know them already. As usual I'm using Slackware.

The second part can be found here.

Creating RAID0

For a RAID0 you need at least one disk, but two are better. To create the files use dd:

# dd if=/dev/zero of=/local/disks/vda bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdb bs=1 count=0 seek=134217728

The two commands above create two empty files of 128 MiB (134217728 bytes) each. Next use losetup to attach the files as loop devices:

# losetup /dev/loop0 /local/disks/vda

# losetup /dev/loop1 /local/disks/vdb

Check that the loop devices are available:

# losetup -a

/dev/loop0: [0811]:262146 (/local/disks/vda)

/dev/loop1: [0811]:262147 (/local/disks/vdb)
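Because dd is called with count=0 and a seek offset, it writes no data at all: the files are sparse, so they report their full size but take up almost no space until something is written to them. A minimal sketch to see this for yourself, assuming GNU coreutils (stat -c) and using a throwaway directory from mktemp as a stand-in for /local/disks:

```shell
# Create one sparse file the same way as above, but in a temporary
# directory (a stand-in for /local/disks, so no root is needed).
dir=$(mktemp -d)
dd if=/dev/zero of="$dir/vda" bs=1 count=0 seek=134217728 2>/dev/null

apparent=$(stat -c %s "$dir/vda")         # size in bytes, as ls -l shows it
allocated=$(du -k "$dir/vda" | cut -f1)   # KiB really allocated on disk

echo "apparent size: $apparent bytes"
echo "allocated:     $allocated KiB"

rm -r "$dir"
```

The allocated figure depends on the filesystem, but it should be far below the apparent size.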

With the two loop devices you can create a RAID0 softraid now:

# mdadm --create /dev/md0 --level=raid0 --raid-device=2 /dev/loop0 /dev/loop1

...

mdadm: array /dev/md0 started.

Check that the softraid device md0 is set up:

# cat /proc/mdstat

...

md0 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

And check the capacity of /dev/md0:

# fdisk -l /dev/md0

Disk /dev/md0: 266 MB, 266338304 bytes

...

This looks good for a RAID0. You can use the device /dev/md0 now to create your partitions etc.
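The two outputs above agree with each other: /proc/mdstat counts in blocks of 1 KiB, while fdisk reports bytes. The following sketch only redoes that arithmetic with the numbers from this section. The variable names are made up, and the explanation for the gap (space reserved for RAID metadata and alignment) is my assumption, not something the output states:

```shell
blocks=260096                   # "260096 blocks" from /proc/mdstat
member=134217728                # size of one disk file in bytes

bytes=$((blocks * 1024))        # mdstat blocks are 1 KiB each
members=$((2 * member))         # raw size of both members together
overhead=$((members - bytes))   # space that is not usable in md0

echo "md0 size:       $bytes bytes"
echo "sum of members: $members bytes"
echo "not usable:     $overhead bytes ($((overhead / 1024 / 1024)) MiB)"
```

So the RAID0 offers nearly the sum of both disks, which is what you expect from striping.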

Creating and failing RAID1 (w/o spare disk)

For a RAID1 you need at least two disks (a RAID1 can even be created with just one disk; I will explain that in my RAID10 article). To create the files use dd:

# dd if=/dev/zero of=/local/disks/vda bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdb bs=1 count=0 seek=134217728

The two commands above create two empty files of 128 MiB each. Next use losetup to attach the files as loop devices:

# losetup /dev/loop0 /local/disks/vda

# losetup /dev/loop1 /local/disks/vdb

Check that the loop devices are available:

# losetup -a

/dev/loop0: [0811]:262146 (/local/disks/vda)

/dev/loop1: [0811]:262147 (/local/disks/vdb)

With the two loop devices you can create a RAID1 softraid now:

# mdadm --create /dev/md0 --level=raid1 --raid-device=2 /dev/loop0 /dev/loop1

...

mdadm: array /dev/md0 started.

Check that the softraid device md0 is set up:

# cat /proc/mdstat

...

md0 : active raid1 loop1[1] loop0[0]

131060 blocks super 1.2 [2/2] [UU]

...

And check the capacity of /dev/md0:

# fdisk -l /dev/md0

Disk /dev/md0: 134 MB, 134205440 bytes

...

This looks good for a RAID1. You can use the device /dev/md0 now to create your partitions etc.

But what happens if a disk fails? To simulate a failure, mark any disk as failed (you need to mark a disk as failed in a real environment too before you can substitute it):

# mdadm /dev/md0 --fail /dev/loop0

mdadm: set /dev/loop0 faulty in /dev/md0

# cat /proc/mdstat

...

md0 : active raid1 loop1[1] loop0[0](F)

131060 blocks super 1.2 [2/1] [_U]

...

The loop0 device in the output of mdstat is now marked with the (F) flag, and the status changed from [2/2] [UU] to [2/1] [_U].
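If you want to spot a degraded array without reading the whole output, you can look for an underscore in the [UU] status field. This is only a sketch: degraded_arrays is a made-up helper name, and it assumes the mdstat layout shown in this article (an "mdX :" line followed by a status line).

```shell
# Print every array whose status field contains "_" (a missing member).
# Reads mdstat-formatted text from stdin.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }          # remember the current array
        match($0, /\[[U_]+\]/) {             # status field such as [_U]
            status = substr($0, RSTART, RLENGTH)
            if (index(status, "_") > 0) print name, status
        }
    '
}

# Demo with the output from above; on a real system you would run:
#   degraded_arrays < /proc/mdstat
degraded_arrays <<'EOF'
md0 : active raid1 loop1[1] loop0[0](F)
      131060 blocks super 1.2 [2/1] [_U]
EOF
```

For a healthy array the function prints nothing.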

Next remove the disk from the array:

# mdadm /dev/md0 --remove /dev/loop0

mdadm: hot removed /dev/loop0 from /dev/md0

Before you can add a new disk, you need to create one more disk:

# dd if=/dev/zero of=/local/disks/vdc bs=1 count=0 seek=134217728

# losetup /dev/loop2 /local/disks/vdc

# losetup -a

...

/dev/loop2: [0811]:262150 (/local/disks/vdc)

Finally add the new disk to the array:

# mdadm /dev/md0 --add /dev/loop2

mdadm: added /dev/loop2

If you check mdstat now you'll see the recovery process of the new disk:

# cat /proc/mdstat

...

md0 : active raid1 loop2[2] loop1[1]

131060 blocks super 1.2 [2/1] [_U]

[===============>.....] recovery = 75.0% (98368/131060) finish=0.0min speed=32789K/sec

...

After a while the recovery is complete and /dev/loop2 is a part of the array:

# cat /proc/mdstat

...

md0 : active raid1 loop2[2] loop1[1]

131060 blocks super 1.2 [2/2] [UU]

...
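Instead of running cat /proc/mdstat again and again, you can let the shell poll it. The sketch below assumes the "recovery = NN.N%" format shown above. recovery_percent and wait_for_recovery are made-up helper names, not mdadm features. As far as I know mdadm also has a --wait option for this purpose, but I don't use it here.

```shell
# Extract the recovery percentage from mdstat-formatted text on stdin.
# Prints nothing if no recovery is running.
recovery_percent() {
    sed -n 's/.*recovery *= *\([0-9.]*\)%.*/\1/p'
}

# Poll a file (default: /proc/mdstat) once per second until the
# recovery line has disappeared.
wait_for_recovery() {
    file=${1:-/proc/mdstat}
    while pct=$(recovery_percent < "$file"); [ -n "$pct" ]; do
        echo "recovery at $pct%"
        sleep 1
    done
    echo "no recovery in progress"
}

# Demo with the progress line from above:
echo '[===============>.....] recovery = 75.0% (98368/131060) finish=0.0min' \
    | recovery_percent

# On a real system:
#   wait_for_recovery
```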

Creating and failing RAID1 (w/ spare disk)

For a RAID1 w/ spare disk you need at least three disks (a RAID1 can even be created with just one disk; I will explain that in my RAID10 article). To create the files use dd:

# dd if=/dev/zero of=/local/disks/vda bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdb bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdc bs=1 count=0 seek=134217728

The three commands above create three empty files of 128 MiB each. Next use losetup to attach the files as loop devices:

# losetup /dev/loop0 /local/disks/vda

# losetup /dev/loop1 /local/disks/vdb

# losetup /dev/loop2 /local/disks/vdc

Check that the loop devices are available:

# losetup -a

/dev/loop0: [0803]:7605014 (/local/disks/vda)

/dev/loop1: [0803]:7605015 (/local/disks/vdb)

/dev/loop2: [0803]:7605016 (/local/disks/vdc)

With the three loop devices you can create a RAID1 softraid with one spare disk now:

# mdadm --create /dev/md0 --level=raid1 --raid-device=2 /dev/loop0 /dev/loop1 --spare-disk=1 /dev/loop2

...

mdadm: array /dev/md0 started.

Check that the softraid device md0 is set up:

# cat /proc/mdstat

...

md0 : active raid1 loop2[2](S) loop1[1] loop0[0]

131008 blocks [2/2] [UU]

...

And check the capacity of /dev/md0:

# fdisk -l /dev/md0

Disk /dev/md0: 134 MB, 134152192 bytes

...

This looks good for a RAID1 with one spare disk. You can use the device /dev/md0 now to create your partitions etc.

But what happens if a disk fails? To simulate a failure, mark any disk as failed (you need to mark a disk as failed in a real environment too before you can substitute it):

# mdadm /dev/md0 --fail /dev/loop0

mdadm: set /dev/loop0 faulty in /dev/md0

# cat /proc/mdstat

...

md0 : active raid1 loop2[2] loop1[1] loop0[3](F)

131008 blocks [2/1] [_U]

[===========>.........] recovery = 56.2% (73728/131008) finish=0.0min speed=36864K/sec

...

As soon as one disk is marked as faulty, the recovery process starts automatically on the previously defined spare disk.

The faulty disk can be substituted as usual.
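The flags behind each member in /proc/mdstat tell you its role: (S) marks a spare and (F) a faulty disk, as in the two outputs above. The following sketch turns one "mdX :" line into a small list of roles. member_roles is a made-up helper name, and it only knows the two flags that appear in this article.

```shell
# Print "device role" for every member on one "mdX : ..." line.
member_roles() {
    printf '%s\n' "$1" | tr -s ' ' '\n' | while IFS= read -r token; do
        case $token in
            *"](S)") role=spare ;;
            *"](F)") role=faulty ;;
            *"]")    role=active ;;
            *)       continue ;;      # md0, ":", "active", "raid1", ...
        esac
        # Strip everything from the first "[" to get the device name.
        printf '%s %s\n' "${token%%\[*}" "$role"
    done
}

echo "before the failure:"
member_roles 'md0 : active raid1 loop2[2](S) loop1[1] loop0[0]'
echo "after the failure:"
member_roles 'md0 : active raid1 loop2[2] loop1[1] loop0[3](F)'
```

In the second call loop2 no longer shows up as spare, because it has taken over for loop0.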

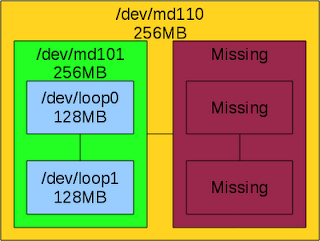

Creating and failing RAID01

One thing first: if you are thinking about using RAID01, use RAID10 instead. This topic is for demonstration only. For a RAID01 you need four disks. To create the files use dd:

# dd if=/dev/zero of=/local/disks/vda bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdb bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdc bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdd bs=1 count=0 seek=134217728

The four commands above create four empty files of 128 MiB each. Next use losetup to attach the files as loop devices:

# losetup /dev/loop0 /local/disks/vda

# losetup /dev/loop1 /local/disks/vdb

# losetup /dev/loop2 /local/disks/vdc

# losetup /dev/loop3 /local/disks/vdd

Check that the loop devices are available:

# losetup -a

/dev/loop0: [0811]:262146 (/local/disks/vda)

/dev/loop1: [0811]:262147 (/local/disks/vdb)

/dev/loop2: [0811]:262148 (/local/disks/vdc)

/dev/loop3: [0811]:262149 (/local/disks/vdd)

With the four loop devices you can create two RAID0 softraid arrays now:

# mdadm --create /dev/md101 --level=raid0 --raid-device=2 /dev/loop0 /dev/loop1

...

mdadm: array /dev/md101 started.

# mdadm --create /dev/md102 --level=raid0 --raid-device=2 /dev/loop2 /dev/loop3

...

mdadm: array /dev/md102 started.

Check that the softraid devices /dev/md101 and /dev/md102 are set up:

# cat /proc/mdstat

...

md102 : active raid0 loop3[1] loop2[0]

260096 blocks super 1.2 512k chunks

md101 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

And check the capacity of /dev/md101 and /dev/md102:

# fdisk -l /dev/md101

Disk /dev/md101: 266 MB, 266338304 bytes

...

# fdisk -l /dev/md102

Disk /dev/md102: 266 MB, 266338304 bytes

...

This looks good for the RAID0 arrays.

Across the two RAID0 arrays create a new RAID1 array now:

# mdadm --create /dev/md110 --level=raid1 --raid-device=2 /dev/md101 /dev/md102

...

mdadm: array /dev/md110 started.

Take a look at /proc/mdstat:

# cat /proc/mdstat

...

md110 : active raid1 md102[1] md101[0]

260084 blocks super 1.2 [2/2] [UU]

md102 : active raid0 loop3[1] loop2[0]

260096 blocks super 1.2 512k chunks

md101 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

Check the capacity:

# fdisk -l /dev/md110

Disk /dev/md110: 266 MB, 266326016 bytes

...

You can use the device /dev/md110 now to create your partitions etc.

But what happens if a disk fails? To simulate a failure, mark any disk as failed (you need to mark a disk as failed in a real environment too before you can substitute it):

# mdadm /dev/md102 --fail /dev/loop3

mdadm: hot remove failed for /dev/loop3: Device or resource busy

As you can see, the disk cannot be marked as faulty. The reason is simple: before you can replace a disk within a RAID01 array, you have to mark the RAID0 array that contains the failed disk as faulty in the RAID1 array and remove it from there:

# mdadm /dev/md110 --fail /dev/md102

mdadm: set /dev/md102 faulty in /dev/md110

# mdadm /dev/md110 --remove /dev/md102

mdadm: hot removed /dev/md102 from /dev/md110

Now stop the RAID0 array:

# mdadm --stop /dev/md102

mdadm: stopped /dev/md102

Check that the array md102 was removed from /proc/mdstat:

# cat /proc/mdstat

...

md110 : active raid1 md101[0]

260084 blocks super 1.2 [2/1] [U_]

md101 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

This has to be done because you cannot replace a disk within a RAID0 array: a RAID0 has no redundancy to rebuild the data from.

To recreate the RAID01 array you need to create a new RAID0 array. Before you continue, create a new disk as a substitute for loop3:

# dd if=/dev/zero of=/local/disks/vde bs=1 count=0 seek=134217728

# losetup /dev/loop4 /local/disks/vde

# losetup -a

...

/dev/loop4: [0811]:262150 (/local/disks/vde)

Now recreate the RAID0 array with the new disk:

# mdadm --create /dev/md102 --level=raid0 --raid-device=2 /dev/loop2 /dev/loop4

...

mdadm: array /dev/md102 started.

And check /proc/mdstat:

# cat /proc/mdstat

...

md102 : active raid0 loop4[1] loop2[0]

260096 blocks super 1.2 512k chunks

md110 : active raid1 md101[0]

260084 blocks super 1.2 [2/1] [U_]

md101 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

Now add the recreated RAID0 array with new disk to the RAID1 array again:

# mdadm /dev/md110 --add /dev/md102

mdadm: re-added /dev/md102

And check /proc/mdstat for the recovery process:

# cat /proc/mdstat

...

md102 : active raid0 loop4[1] loop2[0]

260096 blocks super 1.2 512k chunks

md110 : active raid1 md102[1] md101[0]

260084 blocks super 1.2 [2/1] [U_]

[===============>.....] recovery = 77.5% (201844/260084) finish=0.0min speed=33640K/sec

md101 : active raid0 loop1[1] loop0[0]

260096 blocks super 1.2 512k chunks

...

Once the recovery has finished, the RAID01 array is healthy again.

As mentioned before, avoid RAID01 whenever you can and use RAID10 directly.
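One way to see why RAID10 is the better choice is to count which double failures each layout survives. The sketch below is plain counting, not an mdadm test. It assumes four disks, where disks 0 and 1 form one group and disks 2 and 3 the other: the groups are stripes in the RAID01 built above and mirrors in a RAID10. A RAID01 needs one complete stripe to survive, a RAID10 needs one disk of every mirror.

```shell
raid01_ok=0
raid10_ok=0

# Walk through all six ways to lose two out of four disks.
for a in 0 1 2 3; do
    for b in 0 1 2 3; do
        [ "$a" -lt "$b" ] || continue
        if [ $((a / 2)) -eq $((b / 2)) ]; then
            # Both failed disks are in the same group.
            # RAID01: the other stripe is still complete, so it survives.
            # RAID10: one mirror has lost both disks, so it is gone.
            raid01_ok=$((raid01_ok + 1))
        else
            # One failed disk in each group.
            # RAID01: both stripes are broken, so it is gone.
            # RAID10: every mirror still has one disk, so it survives.
            raid10_ok=$((raid10_ok + 1))
        fi
    done
done

echo "RAID01 survives $raid01_ok of 6 double failures"
echo "RAID10 survives $raid10_ok of 6 double failures"
```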

Creating and failing RAID10

For a RAID10 array you need at least four disks (strictly speaking you don't, but it is more fun). To create the files use dd:

# dd if=/dev/zero of=/local/disks/vda bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdb bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdc bs=1 count=0 seek=134217728

# dd if=/dev/zero of=/local/disks/vdd bs=1 count=0 seek=134217728

The four commands above create four empty files of 128 MiB each. Next use losetup to attach the files as loop devices:

# losetup /dev/loop0 /local/disks/vda

# losetup /dev/loop1 /local/disks/vdb

# losetup /dev/loop2 /local/disks/vdc

# losetup /dev/loop3 /local/disks/vdd

Check that the loop devices are available:

# losetup -a

/dev/loop0: [0811]:262146 (/local/disks/vda)

/dev/loop1: [0811]:262147 (/local/disks/vdb)

/dev/loop2: [0811]:262148 (/local/disks/vdc)

/dev/loop3: [0811]:262149 (/local/disks/vdd)

With the four loop devices you can create the RAID10 array now:

# mdadm --create /dev/md101 --level=raid10 --raid-device=4 /dev/loop0 /dev/loop1 /dev/loop2 /dev/loop3

...

mdadm: array /dev/md101 started.

# cat /proc/mdstat

...

md101 : active raid10 loop3[3] loop2[2] loop1[1] loop0[0]

262016 blocks 64K chunks 2 near-copies [4/4] [UUUU]

...

You can set up a RAID10 with missing disks like this:

# mdadm --create /dev/md101 --level=raid10 --raid-device=4 /dev/loop0 missing /dev/loop2 missing

...

In this case /dev/loop0 and /dev/loop2 are each one side of a mirror, and the other sides of the two mirrors are missing. It is not possible to set up a RAID10 with a completely missing mirror like this:

# mdadm --create /dev/md101 --level=raid10 --raid-device=4 /dev/loop0 /dev/loop1 missing missing

Continue creating array? y

mdadm: RUN_ARRAY failed: Input/output error

mdadm: stopped /dev/md101
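The rule behind the two examples can be written down as a small check. This sketch assumes the layout shown in mdstat above ("2 near-copies") with an even number of devices, so that the first and second device form one mirror, the third and fourth the next one, and so on. raid10_startable is a made-up helper name; it only applies this rule to the argument list and does not call mdadm.

```shell
# Return success if every mirror pair has at least one real device.
# Arguments: the device list in the order you would pass it to mdadm.
raid10_startable() {
    while [ "$#" -ge 2 ]; do
        if [ "$1" = missing ] && [ "$2" = missing ]; then
            return 1                  # a whole mirror is missing
        fi
        shift 2                       # go to the next mirror pair
    done
    return 0
}

for list in "/dev/loop0 missing /dev/loop2 missing" \
            "/dev/loop0 /dev/loop1 missing missing"; do
    # $list is split into words on purpose here.
    if raid10_startable $list; then
        echo "ok:     $list"
    else
        echo "broken: $list"
    fi
done
```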

Once the RAID10 array is set up, check the capacity of /dev/md101:

# fdisk -l /dev/md101

Disk /dev/md101: 268 MB, 268304384 bytes

...

This looks good for the RAID10 array. You can use the device /dev/md101 now to create your partitions etc.

But what happens if a disk fails? To simulate a failure, mark any disk as failed in the RAID10 array (you need to mark a disk as failed in a real environment too before you can substitute it):

# mdadm /dev/md101 --fail /dev/loop2

mdadm: set /dev/loop2 faulty in /dev/md101

And remove it:

# mdadm /dev/md101 --remove /dev/loop2

mdadm: hot removed /dev/loop2

Then create a new disk:

# dd if=/dev/zero of=/local/disks/vde bs=1 count=0 seek=134217728

# losetup /dev/loop4 /local/disks/vde

# losetup -a

...

/dev/loop4: [0811]:262150 (/local/disks/vde)

And add it to the RAID10 array md101:

# mdadm /dev/md101 --add /dev/loop4

mdadm: added /dev/loop4

And check /proc/mdstat for the recovery process:

# cat /proc/mdstat

...

md101 : active raid10 loop4[4] loop3[3] loop1[1] loop0[0]

262016 blocks 64K chunks 2 near-copies [4/3] [UU_U]

[==========>..........] recovery = 53.5% (70528/131008) finish=0.0min speed=35264K/sec

...

Once the recovery process has finished, your RAID10 array is healthy again.
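All sections reuse the same files and loop devices, so you have to clean up before you start the next one. The following is a cautious sketch of such a cleanup, not something I ran for the outputs above. run and teardown are made-up helper names. By default the script only prints the commands, and only with RUN=1 (as root) does it execute them. The --zero-superblock step is my addition to wipe the old RAID metadata from the members.

```shell
# Print the command (default) or execute it (RUN=1).
run() {
    if [ "${RUN:-0}" = 1 ]; then
        "$@"
    else
        echo "$@"
    fi
}

# $1: md device, remaining arguments: the loop devices to release.
teardown() {
    md=$1
    shift
    run mdadm --stop "$md"
    for loop in "$@"; do
        run mdadm --zero-superblock "$loop"   # wipe old RAID metadata
        run losetup -d "$loop"                # detach the loop device
    done
}

# Clean up after the RAID10 section (loop2 was removed from the array
# but is still attached):
teardown /dev/md101 /dev/loop0 /dev/loop1 /dev/loop2 /dev/loop3 /dev/loop4
```

After that you can delete the files in /local/disks or create them again with dd.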
